Secure AI at Full Speed: OPAQUE Collaborates with IBM

Most AI discussions focus on scale, but what about sensitive data? Organizations want to harness AI's capabilities while keeping their critical information secure and private, yet the risk of data leakage remains the obstacle that stalls many of these initiatives. Today, OPAQUE and IBM are solving this challenge together.

Our collaboration brings IBM's new Granite 4.0 models to OPAQUE's Confidential AI platform, creating a powerful combination for running sensitive AI workloads with unmatched security and efficiency. This means organizations can deploy AI on their most valuable data without compromising privacy or performance.

As the trusted leader in Confidential AI, OPAQUE is the only platform that delivers verifiable data privacy and compliance guarantees that unblock AI adoption, which is crucial for enterprises stalled by data leakage concerns. With OPAQUE, enterprises can accelerate AI into production and deploy AI agents with cryptographic proof that one user's data can never influence or be accessed by another.

Organizations using OPAQUE's Confidential AI platform are seeing real results: 4–5X faster time-to-production, enabled by verifiable guarantees; a 67% reduction in deployment costs through automated compliance and lower infrastructure requirements; and accuracy/inference quality improved from 26% to 99% by unlocking their most sensitive data.

Why Small Models Make a Big Difference: Inside Granite 4.0's Efficiency Breakthrough

For too long, organizations had to choose between powerful AI and practical deployment. Large models demanded expensive hardware and complex infrastructure, while smaller ones often fell short on capability. Granite 4.0 changes this equation.

Here's what makes Granite 4.0 different: it uses a novel, efficient hybrid architecture that combines the linear-scaling efficiency of Mamba-2 with the precision of transformers. As a result, Granite 4.0 can handle long and complex tasks without hitting the quadratic bottleneck that slows down traditional transformer-based models, whose attention cost grows with the square of the input length.
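
To make the difference between linear and quadratic growth concrete, here is a minimal Python sketch that counts abstract operations for full self-attention (every token scored against every other token) versus a fixed-size state-space scan of the kind Mamba-2 uses. The `state_size` of 128 is a hypothetical value, the counts are not FLOPs measured on Granite 4.0, and because Granite 4.0 mixes both layer types, real costs fall between the two curves.

```python
# Illustrative cost model: counts abstract operations, not measured FLOPs.


def attention_ops(seq_len: int) -> int:
    """Full self-attention scores every token against every token: n * n."""
    return seq_len * seq_len


def scan_ops(seq_len: int, state_size: int = 128) -> int:
    """A state-space scan updates a fixed-size state once per token.

    state_size=128 is a hypothetical value chosen for illustration.
    """
    return seq_len * state_size


if __name__ == "__main__":
    for n in (1_000, 10_000, 100_000):
        quad, lin = attention_ops(n), scan_ops(n)
        print(f"n={n:>7,}  attention={quad:>15,}  scan={lin:>12,}  ratio={quad / lin:,.0f}x")
```

Each tenfold increase in sequence length multiplies the attention count by 100 but the scan count only by 10, which is the gap a hybrid design exploits on long inputs.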

On top of this, some models in the Granite 4.0 family add a Mixture of Experts (MoE) routing strategy. Instead of using all of its parameters for every input, an MoE model activates only the necessary “experts”, a small subset of its parameters, as needed. Think of it like a team that brings in exactly the right experts for each task instead of having everyone in every meeting. This allows the Tiny and Small models to scale capability without overwhelming hardware, while the Micro variants provide non-MoE alternatives:

- Granite 4.0 Micro (3B): Dense Transformer

- Granite 4.0 Micro Hybrid (3B): Dense Mamba-2 + Transformer

- Granite 4.0 Tiny (7B, 1B active): MoE with Mamba-2 + Transformer

- Granite 4.0 Small (32B, 9B active): MoE with Mamba-2 + Transformer
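
From the list above, Tiny activates about 1B of its 7B parameters (roughly 14%) and Small about 9B of 32B (roughly 28%). The sketch below shows the general top-k routing idea behind those numbers in plain Python: a gate scores every expert, only the highest-scoring `top_k` experts run, and their outputs are blended by renormalized gate weights. It illustrates MoE routing in general, not Granite 4.0's implementation; the eight toy experts, the gate logits, and `top_k=2` are hypothetical, and in a real model the gate logits come from a learned router applied to each token's hidden state.

```python
import math
from typing import Callable, List, Sequence, Tuple

Expert = Callable[[float], float]


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(
    x: float,
    gate_logits: Sequence[float],
    experts: Sequence[Expert],
    top_k: int = 2,
) -> Tuple[float, List[int]]:
    """Route x to the top_k experts with the highest gate logits.

    Unselected experts are never called, which is where the compute saving
    comes from.
    """
    # Rank expert indices by gate logit (highest first) and keep top_k.
    ranked = sorted(range(len(experts)), key=lambda i: gate_logits[i], reverse=True)
    chosen = ranked[:top_k]
    # Renormalize gate weights over the chosen experts only.
    weights = softmax([gate_logits[i] for i in chosen])
    output = sum(w * experts[i](x) for w, i in zip(weights, chosen))
    return output, chosen


if __name__ == "__main__":
    # Eight hypothetical toy experts: expert k multiplies its input by k + 1.
    experts = [lambda v, k=k: (k + 1) * v for k in range(8)]
    # Hypothetical gate logits; a real router computes these per token.
    gate_logits = [0.1, 2.0, -1.0, 0.5, 3.0, 0.0, -0.5, 1.0]
    y, chosen = moe_forward(1.0, gate_logits, experts, top_k=2)
    print(f"experts used: {chosen}, output: {y:.4f}")
```

Here only experts 4 and 1 run and the other six are never called, so compute scales with `top_k` rather than with the total number of experts.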

Before, During, and After: Verifiable Trust to Innovate Fearlessly

Across the entire AI lifecycle (before, during, and after execution), OPAQUE provides enterprises with provable, end-to-end guarantees of data privacy. This verifiable chain of trust means AI agents can be deployed on sensitive enterprise data without fear of leakage, compromise, or non-compliance.

- Before execution → Attested: Agents, models, and AI workloads are hardware-attested, so their integrity, configuration, and compliance are cryptographically verified before they run.

- During execution → Enforced: Sensitive data stays encrypted in use, with data privacy and policies verifiably enforced at runtime.

- After execution → Audited: Compliance is automated and provable with immutable, hardware-signed audit and attestation reports, which provide cryptographic proof of compliance and continuous oversight of policy enforcement.
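
The “immutable, hardware-signed audit” idea can be illustrated with a hash-chained log: each entry's hash covers the previous entry's hash, so editing or reordering any record breaks verification. The Python sketch below is a generic illustration, not OPAQUE's audit format. `SIGNING_KEY` is a hypothetical placeholder, and HMAC stands in for the signatures a trusted execution environment would produce with hardware-rooted keys.

```python
import hashlib
import hmac
import json
from typing import Dict, List

# HYPOTHETICAL stand-in for a hardware-held key. Real TEEs sign with
# hardware-rooted keys in a vendor-defined attestation format.
SIGNING_KEY = b"demo-key-not-for-production"
GENESIS = "0" * 64


def _digest(prev_hash: str, event: Dict) -> str:
    """Hash the previous entry's hash together with this event."""
    payload = json.dumps(event, sort_keys=True).encode()
    return hashlib.sha256(prev_hash.encode() + payload).hexdigest()


def _sign(entry_hash: str) -> str:
    return hmac.new(SIGNING_KEY, entry_hash.encode(), hashlib.sha256).hexdigest()


def append(log: List[Dict], event: Dict) -> None:
    """Append an event, chaining its hash to the previous entry and signing it."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    entry_hash = _digest(prev_hash, event)
    log.append({"event": event, "prev": prev_hash, "hash": entry_hash, "sig": _sign(entry_hash)})


def verify(log: List[Dict]) -> bool:
    """Recompute every hash and signature; any edited or reordered entry fails."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash:
            return False
        expected = _digest(prev_hash, entry["event"])
        if not hmac.compare_digest(entry["hash"], expected):
            return False
        if not hmac.compare_digest(entry["sig"], _sign(expected)):
            return False
        prev_hash = entry["hash"]
    return True


if __name__ == "__main__":
    log: List[Dict] = []
    append(log, {"phase": "before", "check": "workload attested"})
    append(log, {"phase": "during", "check": "policy enforced"})
    append(log, {"phase": "after", "check": "report issued"})
    print("intact log verifies:", verify(log))
    log[1]["event"]["check"] = "policy skipped"  # simulate tampering
    print("tampered log verifies:", verify(log))
```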

Paired with Granite 4.0's efficiency, this means faster responses and smoother operation when running multiple tasks. What does this mean in practice? Imagine running multiple AI agents simultaneously for complex workflows, or processing sensitive HR documents without sending them to external servers. Granite 4.0's architecture makes these scenarios not just possible, but practical and cost-effective.

The platform also supports advanced multi-agent scalability, enabling organizations to run multiple fast, specialized AI agents simultaneously across workflows. This capability is crucial for OPAQUE’s Agent Studio, which orchestrates dynamic, compound AI agents securely and efficiently—helping enterprises automate complex, multi-step tasks while maintaining strict data privacy and performance.

When Security Meets Speed: How OPAQUE and Granite 4.0 Work Together

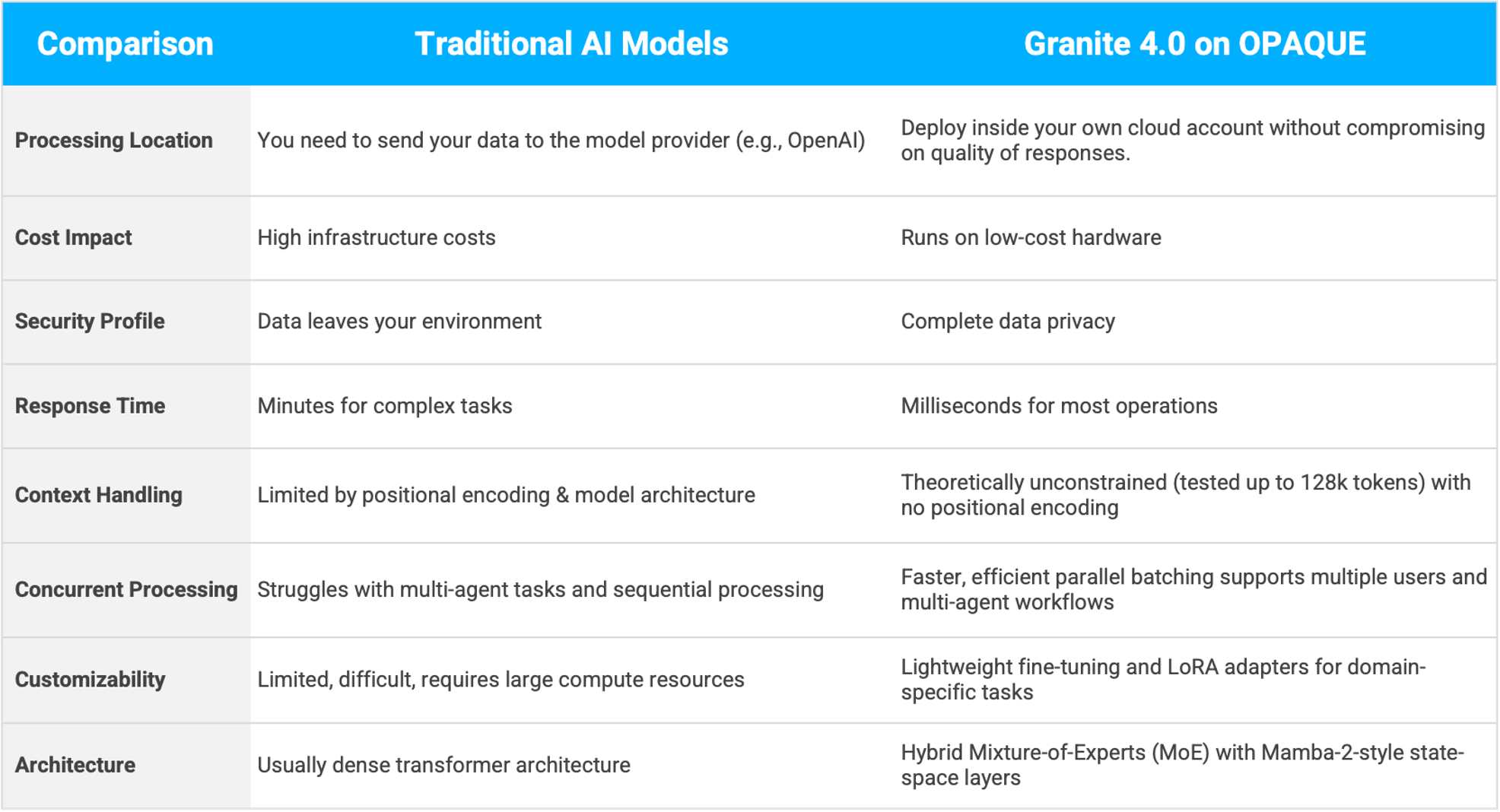

Security and AI usually don't play well together. Most organizations either sacrifice speed for privacy or privacy for speed. OPAQUE changes this dynamic by creating a secure foundation for Granite 4.0 to handle your sensitive data.

We built secure enclaves that protect your AI workloads from start to finish. Data stays safe, models stay protected, and everything runs right where you need it. When you combine this with Granite 4.0, you get responses in milliseconds instead of minutes. No more security trade-offs.

Want to summarize confidential documents? Build a knowledge base from private contracts? Run complex agent workflows on sensitive customer data? Together, OPAQUE and Granite 4.0 let you tackle all these tasks while keeping your data on your terms — private, secure, and fast.

The smaller footprint of Granite 4.0 models makes them especially powerful on OPAQUE because they are easy to customize for an organization's workflow. Customization is just as simple for more complex workflows such as agent-based operations or retrieval-augmented generation (RAG).

With lightweight LoRA (low-rank adaptation) adapters and fine-tuning toolkits, teams can quickly tailor each model to domain-specific use cases and confidential data types, combining open-source flexibility with strict privacy requirements. Typical examples include producing highly accurate financial reports, analyzing legal contracts, answering employee HR questions, and processing healthcare records.
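
LoRA keeps a model's original weight matrix W frozen and learns two small matrices, B and A, whose product is added to it, scaled by alpha / r, where r is the rank. That is why adapters are cheap: they train r(d_out + d_in) parameters instead of d_out × d_in. The Python sketch below computes both counts and applies an adapter to a tiny matrix. The 4096 × 4096 layer size and rank 8 are hypothetical values, not Granite 4.0's actual dimensions, and a real fine-tuning toolkit would do this with tensor libraries rather than nested lists.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python matrix product a @ b."""
    inner = len(b)
    return [
        [sum(a[i][t] * b[t][j] for t in range(inner)) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def lora_param_counts(d_out: int, d_in: int, rank: int) -> Tuple[int, int]:
    """Return (frozen weight size, trainable adapter size).

    W is d_out x d_in; the adapter trains B (d_out x rank) and A (rank x d_in).
    """
    return d_out * d_in, rank * (d_out + d_in)


def lora_effective_weight(w: Matrix, b: Matrix, a: Matrix, alpha: float) -> Matrix:
    """W' = W + (alpha / rank) * (B @ A); W itself is never modified."""
    rank = len(a)
    delta = matmul(b, a)
    scale = alpha / rank
    return [
        [w[i][j] + scale * delta[i][j] for j in range(len(w[0]))]
        for i in range(len(w))
    ]


if __name__ == "__main__":
    full, adapter = lora_param_counts(4096, 4096, rank=8)  # hypothetical layer size
    print(f"frozen: {full:,}  adapter: {adapter:,}  ({adapter / full:.2%})")

    w = [[1.0, 0.0], [0.0, 1.0]]  # toy frozen weight
    b = [[1.0], [0.0]]            # 2 x 1
    a = [[0.0, 1.0]]              # 1 x 2, so rank = 1
    print(lora_effective_weight(w, b, a, alpha=1.0))
```

For the hypothetical 4096 × 4096 layer at rank 8, the adapter has 65,536 trainable parameters against 16,777,216 in the frozen weight, about 0.39%, which is why many domain-specific adapters can be trained and stored cheaply.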

Simple integration with OPAQUE’s platform lets you adapt models with your private data, ensuring your AI is as specialized as your business demands, all without sacrificing security or control—and without the heavy costs of training large models.

This freedom to customize is backed by Granite 4.0’s open-source foundation. The models are released under an Apache 2.0 license, which enables free commercial use and gives developers complete freedom to modify the models as needed. For organizations, this removes significant licensing barriers and provides total control over how these powerful AI tools are adapted and deployed within the secure OPAQUE environment.

Putting It All Together: What You Can Do Right Now

Document summarization used to mean choosing between speed and security. Not anymore. With Granite 4.0 on OPAQUE's platform, your team can quickly digest lengthy contracts, financial reports, and internal memos without sending sensitive details outside your walls.

But that's just the start. Organizations are already using this combination to build private knowledge bases that are truly useful. Their teams can search through years of confidential documents and get instant, relevant answers. By customizing small models like Granite, you can decrease costs by more than 90% compared to massive, state-of-the-art models, while maintaining competitive performance.

Where Granite 4.0’s efficiency really shines is in handling multiple tasks at once. This is especially impactful for OPAQUE's Agent Studio, where multiple AI agents can run in parallel with millisecond-level inference. Speed matters when you're running several AI agents together, and that's exactly what we've solved for: each agent responds in milliseconds, not minutes, and you can tune each one for your needs. Want to process HR files while also reviewing contracts? Need to analyze financial data? Each task gets its own customized workflow, and they all run together smoothly, keeping your data secure the whole time.
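
Running several agents at once is, at its core, a concurrency pattern. The Python sketch below uses `asyncio.gather` to launch three hypothetical agents (`hr_agent`, `contracts_agent`, `finance_agent`) together. `run_agent` only sleeps to simulate latency and is not an Agent Studio API. Because the calls overlap, total wall time tracks the slowest agent rather than the sum of all three, although real throughput depends on how the serving backend schedules requests.

```python
import asyncio
import time
from typing import Dict, List


async def run_agent(name: str, task: str, latency_s: float) -> Dict[str, str]:
    """Hypothetical agent: sleeps to simulate a model call, then reports back."""
    await asyncio.sleep(latency_s)
    return {"agent": name, "result": f"{name} finished: {task}"}


async def run_workflows() -> List[Dict[str, str]]:
    """Launch one agent per workflow and wait for all of them concurrently."""
    results = await asyncio.gather(
        run_agent("hr_agent", "summarize HR files", 0.05),
        run_agent("contracts_agent", "review contracts", 0.08),
        run_agent("finance_agent", "analyze financial data", 0.03),
    )
    # gather returns results in the order the coroutines were passed in.
    return list(results)


if __name__ == "__main__":
    start = time.perf_counter()
    for r in asyncio.run(run_workflows()):
        print(r["result"])
    # Wall time is close to the slowest agent (0.08 s), not the 0.16 s total.
    print(f"elapsed: {time.perf_counter() - start:.2f}s")
```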

"Running Granite 4.0 on OPAQUE's Confidential AI platform gives organizations something they've been asking for: the ability to run sophisticated AI workloads on sensitive data without sacrificing security or speed," says Rishabh Poddar, CTO of OPAQUE. "This partnership brings together efficient processing with complete data privacy, opening up new possibilities for secure AI applications across industries."

What's Next for Secure, Efficient AI

Starting October 2nd, Granite 4.0 models will be available on OPAQUE's Confidential AI platform. This collaboration marks a significant shift in how organizations can approach their sensitive AI workloads. No more choosing between security and capability, between privacy and performance. With OPAQUE and IBM working together, you can begin building secure and efficient AI applications today.

OPAQUE delivers the foundation for this shift. Granite 4.0 is powerful on its own—but paired with OPAQUE’s before/during/after model of verifiable guarantees and compliance-ready auditability, it becomes the only safe way to deploy AI agents on sensitive enterprise data with confidence. Trusted by ServiceNow, Accenture, Encore Capital, Anthropic, RiskStream, and Bloomfilter, OPAQUE ensures speed, accuracy, and compliance without compromise.

Ready to run Granite 4.0 securely on OPAQUE's Confidential AI platform? Visit opaque.co to learn more about getting started.