Secure computation has become increasingly popular for protecting the privacy and integrity of data during computation. The reason is that it provides two tremendous advantages.

The first advantage is that it offers “encryption in use” in addition to the already existing “encryption at rest” and “encryption in transit.” The “encryption in use” paradigm is important for security because “encryption at rest” protects the data only when it is in storage and “encryption in transit” protects the data only when it is being communicated over the network. In both cases, the data is exposed during computation, namely, while it is being “used” or processed at the servers. That processing window is when many data breaches happen, at the hands of either hackers or insider attackers.

The second advantage is that it enables different parties to collaborate by putting their data together for the purpose of learning insights from their aggregate data, without actually sharing their data with each other. The reason is that parties share encrypted data with each other, so no party can see the data of the other party in decrypted form. My students and I call this “sharing without showing.” At the same time, the parties can still run useful functions on the data and release only the computation results. For example, medical organizations can train a disease treatment model over their aggregate patient data without seeing each other’s data. Another example is within a financial institution, such as a bank: data analysts can build models across different branches or teams of the bank that would otherwise not be allowed to share data with each other.

Today, there are two prominent approaches to secure computation:

- A purely cryptographic approach using homomorphic encryption and/or secure multi-party computation

- A hardware security approach using hardware enclaves often combined with cryptographic fortification

I am often asked to compare these two approaches and to recommend which one is more ready for real-world use. My students and I have been researching both approaches for years, advancing the state of the art in each and publishing our results at top security and systems conferences.

It turns out that there is no clear winner between these two approaches: there is a tradeoff between them in terms of security guarantees, performance, and deployment, which I explain below. For simple computations, both approaches tend to be efficient, so the choice between them comes down to deployment and security considerations. However, for complex workloads such as machine learning training and SQL analytics, the purely cryptographic approach is far too inefficient for many real-world deployments; the hardware security approach is currently the only practical approach of the two for such workloads.

MPC: A quick introduction

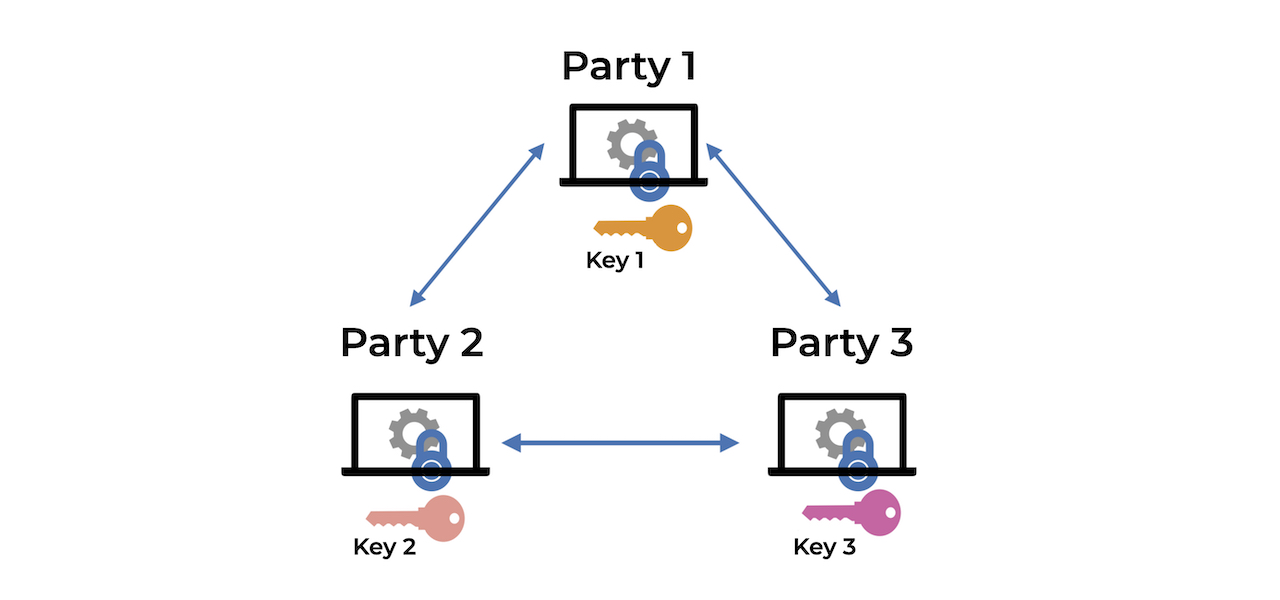

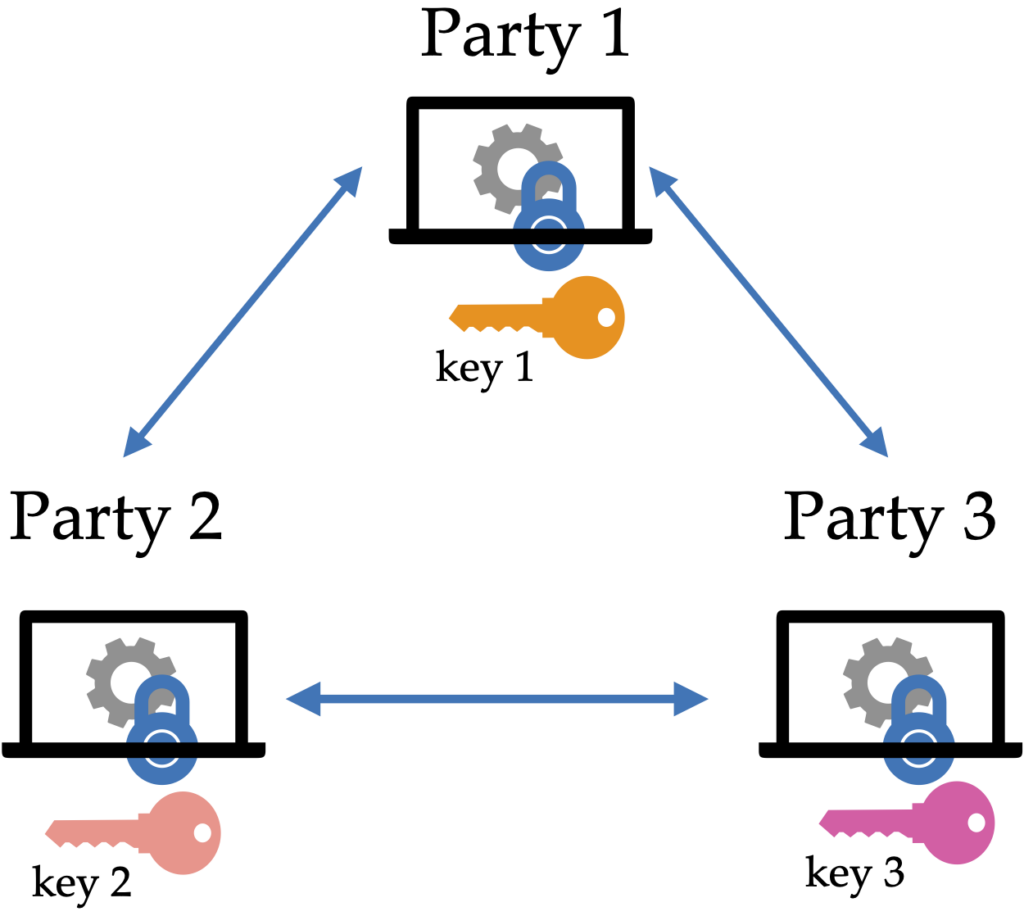

In secure multi-party computation (MPC), n parties holding private inputs x_1, …, x_n compute a function f(x_1, …, x_n) without sharing their inputs with each other. MPC is a cryptographic protocol at the end of which the parties learn the function result, but in the process no party learns the input of any other party beyond what can be inferred from the function result.
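To make the definition concrete, here is a minimal sketch of one classic building block, additive secret sharing, used to compute f(x_1, …, x_n) = x_1 + … + x_n. It is an illustration only: the names `MODULUS`, `share`, and `secure_sum` are made up for this example, all parties are simulated in one process, and the sketch only covers parties that follow the protocol (it is not maliciously secure). It also assumes non-negative inputs whose sum stays below `MODULUS`.

```python
import secrets

# Public modulus for all share arithmetic (an illustrative choice, not a recommendation).
MODULUS = 2**61 - 1


def share(value, n_parties):
    """Split `value` into n additive shares that sum to `value` mod MODULUS."""
    shares = [secrets.randbelow(MODULUS) for _ in range(n_parties - 1)]
    last = (value - sum(shares)) % MODULUS
    return shares + [last]


def secure_sum(private_inputs):
    """Simulate n parties jointly computing the sum of their private inputs."""
    n = len(private_inputs)
    # Step 1: party i splits x_i into n shares and sends share j to party j.
    all_shares = [share(x, n) for x in private_inputs]
    # Step 2: party j adds the shares it received, one from each party.
    partial_sums = [
        sum(all_shares[i][j] for i in range(n)) % MODULUS for j in range(n)
    ]
    # Step 3: the parties publish their partial sums; the total is the result.
    return sum(partial_sums) % MODULUS


if __name__ == "__main__":
    print(secure_sum([10, 20, 12]))
```

Each individual share is a uniformly random value, so a party that sees only the shares sent to it learns nothing about another party's input beyond what the final sum reveals.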

There are several threat models for computation in MPC, with different performance overheads. A natural threat model is to assume that all but one of the participating parties are malicious, so each party need only trust itself. However, this natural threat model comes with implementations that have high overheads because the attacker is quite powerful. To improve performance, people often compromise in the threat model by assuming that a majority of the parties act honestly (and only a minority is malicious).

Also, since the performance overheads often increase with the number of parties, another compromise in some MPC models is to outsource the computation to m < n servers in different trust domains. (For example, some works propose outsourcing the secure computation to two mutually distrustful servers.) This latter model tends to be weaker than threat models where a party only needs to trust itself, so in the rest of this blog post, we consider the first two scenarios only, namely maliciously-secure n-party MPC. This also makes the comparison to secure computation via hardware enclaves more consistent, because this second approach, which we discuss next, aims to protect against all parties being malicious.

Hardware enclaves: A quick introduction

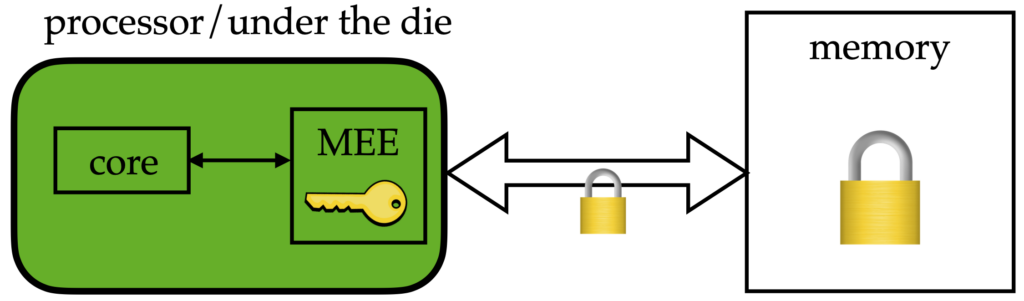

Trusted execution environments such as hardware enclaves aim to protect an application’s code and data from all other software in a system.

Via a special hardware unit called the Memory Encryption Engine (MEE), hardware enclaves encrypt the data that leaves the processor for main memory, ensuring that even an administrator of a machine with full privilege examining the data in memory sees encrypted data. When encrypted data returns from main memory into the processor, the MEE decrypts the data and the CPU computes on decrypted data. This is what enables the high performance of enclaves in comparison to purely cryptographic computation: the CPU performs computation on raw data as in regular processing.

At the same time, from the perspective of any software or user accessing the machine, the data looks encrypted at any point in time: the data going into the processor and coming out is always encrypted, giving the illusion that the processor is computing on the encrypted data. Hardware enclaves also provide a very useful feature called remote attestation, which lets remote clients verify the code and data loaded in an enclave and establish a secure connection with the enclave over which they can exchange keys.
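The measure-then-verify idea behind remote attestation can be sketched as a toy simulation. This is not how any real enclave SDK works: `HARDWARE_KEY`, `measure`, `enclave_quote`, and `client_verify` are hypothetical names, and an HMAC under a shared key stands in for the hardware-rooted signature that a real client would check through the vendor's attestation infrastructure.

```python
import hashlib
import hmac
import secrets

# Hypothetical stand-in for a secret rooted in the hardware. Real attestation
# uses asymmetric signatures; a shared HMAC key is used only to keep this short.
HARDWARE_KEY = secrets.token_bytes(32)


def measure(code: bytes) -> bytes:
    """Hash of the code loaded into the (simulated) enclave."""
    return hashlib.sha256(code).digest()


def enclave_quote(code: bytes, nonce: bytes) -> tuple:
    """Simulated hardware: bind the code measurement to the client's fresh nonce."""
    measurement = measure(code)
    tag = hmac.new(HARDWARE_KEY, measurement + nonce, hashlib.sha256).digest()
    return measurement, tag


def client_verify(expected_code: bytes, nonce: bytes, quote: tuple) -> bool:
    """Client: check the quote is authentic and matches the code it expects."""
    measurement, tag = quote
    expected_tag = hmac.new(
        HARDWARE_KEY, measurement + nonce, hashlib.sha256
    ).digest()
    authentic = hmac.compare_digest(tag, expected_tag)
    same_code = hmac.compare_digest(measurement, measure(expected_code))
    return authentic and same_code


if __name__ == "__main__":
    code = b"def train(data): ..."
    nonce = secrets.token_bytes(16)
    print(client_verify(code, nonce, enclave_quote(code, nonce)))
```

Only after a check of this kind succeeds would a client release keys or data to the enclave; the nonce keeps an old quote from being replayed.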

There are a number of hardware enclave services available today on public clouds such as Intel SGX in Azure, Amazon Nitro Enclaves in AWS, SEV from AMD in Google Cloud, and others.

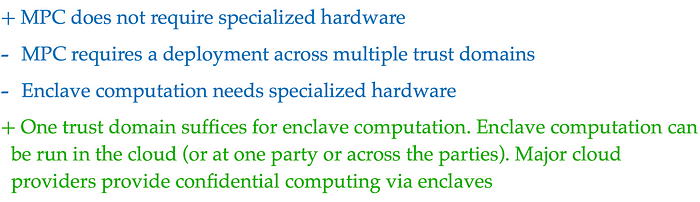

Deployment comparison

With a purely cryptographic approach, there is no need for specialized hardware and special hardware assumptions. At the same time, in a setting like MPC, the parties must be deployed in different trust domains for the security guarantees of MPC to hold. In the threat models we discussed above, participating organizations have to run the cryptographic protocol on premises or on their private clouds, which is often a setup, management, and/or cost burden compared to running the whole computation on a cloud, and can be a deal breaker for some organizations. With homomorphic encryption, in principle, one can run the whole computation in the cloud, but homomorphic encryption does not protect against malicious attackers as MPC and hardware enclaves do—for such protection, one would have to additionally use heavy cryptographic tools such as zero-knowledge proofs.

In contrast, hardware enclaves are now available on major cloud providers such as Azure, AWS, and Google Cloud. Running an enclave collaborative computation is as easy as using one of these cloud services. This also means that to use enclaves, one does not need to purchase specialized hardware, because the major clouds already provide services based on these machines. Of course, if the participating organizations want, they could each deploy enclaves on their premises or private clouds and perform the collaborative computation across the organizations in a distributed manner, similarly to MPC. In the rest of this blog post, we assume a cloud-based deployment for hardware enclaves, unless specified otherwise.

Security comparison

By homomorphic encryption here we refer to homomorphic encryption that can compute more complex functions, so either fully homomorphic encryption or leveled homomorphic encryption. There are homomorphic encryption schemes that can perform simple functions efficiently (such as addition or low degree polynomials). As soon as the function becomes more complex, performance degrades significantly.

Homomorphic encryption is a special form of secure computation, where a cloud can compute a function over encrypted data without interacting with the owner of the encrypted data. Homomorphic encryption is a cryptographic tool that can be used as part of an MPC protocol. MPC is more generic and encompasses more cryptographic tools; parties running an MPC protocol often interact with each other over multiple rounds, which affords better performance than restricting to a non-interactive setting.
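As a small illustration of computing on encrypted data without talking to the data owner, here is a toy version of the Paillier scheme, a well-known additively homomorphic scheme (so it covers only the "simple functions" case, not fully or leveled homomorphic encryption). The primes are tiny and hard-coded, which makes this insecure by design; the function names are illustrative and not taken from any library. It needs Python 3.8+ for `pow(x, -1, n)`.

```python
import math
import secrets


def keygen(p=1789, q=1861):
    """Toy Paillier key generation with tiny fixed primes (insecure, demo only)."""
    n = p * q
    g = n + 1                    # standard simplification g = n + 1
    phi = (p - 1) * (q - 1)
    mu = pow(phi, -1, n)         # modular inverse of phi mod n
    return (n, g), (phi, mu)


def encrypt(public_key, message):
    n, g = public_key
    n_sq = n * n
    # Pick random r in [1, n) that is invertible mod n.
    while True:
        r = secrets.randbelow(n - 1) + 1
        if math.gcd(r, n) == 1:
            break
    return (pow(g, message, n_sq) * pow(r, n, n_sq)) % n_sq


def decrypt(public_key, private_key, ciphertext):
    n, _ = public_key
    phi, mu = private_key
    u = pow(ciphertext, phi, n * n)
    return ((u - 1) // n * mu) % n


def add_encrypted(public_key, c1, c2):
    """Server side: multiply ciphertexts to add the hidden plaintexts."""
    n, _ = public_key
    return (c1 * c2) % (n * n)


if __name__ == "__main__":
    pub, priv = keygen()
    total = add_encrypted(pub, encrypt(pub, 20), encrypt(pub, 22))
    print(decrypt(pub, priv, total))
```

The server calling `add_encrypted` never holds the private key and never exchanges messages with the data owner during the computation, which is the non-interactive property described above.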

For general functions, homomorphic encryption is slower than MPC. Also, as discussed, it does not provide malicious security without employing an additional cryptographic tool such as zero knowledge proofs, which can be computationally very expensive.

When an MPC protocol protects against some malicious parties, it also protects against any side-channel attacks at the servers of those parties. In this sense, the threat model for the malicious parties is cleaner than the threat model for hardware enclaves: it does not matter what attack adversaries mount at their own servers, because MPC already assumes that these parties may be compromised in any way. For the honest parties, however, MPC does not protect against side-channel attacks.

In the case of enclaves, attackers can attempt to perform side-channel attacks. A common class of side-channel attacks (which encompasses many different types of side-channel attacks) consists of those in which an attacker observes which memory locations are accessed, as well as the order and frequency of these accesses. Even though the data at those memory locations is encrypted, seeing the pattern of access can provide confidential information to the attacker. These attacks are called memory access pattern attacks, or simply access pattern attacks.
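A toy example shows why access patterns alone can leak. The sketch below (the function name and data are made up for illustration) runs an ordinary binary search and records only which positions were touched, which is roughly what an access pattern attacker would observe while the values themselves stay encrypted.

```python
def binary_search_trace(sorted_values, secret_target):
    """Return the sequence of indices touched while searching for secret_target."""
    trace = []
    lo, hi = 0, len(sorted_values) - 1
    while lo <= hi:
        mid = (lo + hi) // 2
        trace.append(mid)  # this index is what the attacker gets to observe
        if sorted_values[mid] == secret_target:
            break
        if sorted_values[mid] < secret_target:
            lo = mid + 1
        else:
            hi = mid - 1
    return trace


if __name__ == "__main__":
    values = list(range(0, 80, 10))         # [0, 10, ..., 70]
    print(binary_search_trace(values, 10))  # [3, 1]
    print(binary_search_trace(values, 60))  # [3, 5, 6]
```

The two traces differ, so an observer who never sees a single plaintext value can still tell that the two searches were for different secrets, and can narrow down where each secret sits in the sorted order.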

My research group as well as other groups have done significant research on protecting against these access pattern side-channel attacks using a cryptographic technique called “data-oblivious computation.” Oblivious computation ensures that the accesses to memory do not reveal any information about the sensitive data being accessed. Intuitively, it transforms the code into a side-channel-free version of the code, similarly to how the OpenSSL cryptographic libraries have been hardened.
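The simplest instance of the idea is an oblivious array lookup: instead of jumping straight to the secret position, the code touches every position in the same order and selects the wanted value arithmetically rather than with a data-dependent branch. The sketch below is illustrative only (the function name is made up), and Python itself gives no constant-time guarantees; real implementations rely on lower-level constant-time primitives.

```python
def oblivious_lookup(table, secret_index):
    """Read table[secret_index] while touching every entry in a fixed order.

    Returns the value together with the access trace, so the trace can be
    compared across different secret indices.
    """
    trace = []
    result = 0
    for i, value in enumerate(table):
        trace.append(i)                     # every index is accessed, always
        is_match = int(i == secret_index)   # 1 for the wanted entry, else 0
        # Arithmetic select: keeps `value` on a match, the old result otherwise.
        result = is_match * value + (1 - is_match) * result
    return result, trace


if __name__ == "__main__":
    table = [5, 6, 7, 8]
    print(oblivious_lookup(table, 1))
    print(oblivious_lookup(table, 3))
```

Both calls return the same trace, so the access pattern no longer depends on the secret index. The price is a full scan per lookup, which is one reason oblivious versions of a workload can cost noticeably more than the original.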

Oblivious computation protects against a large class of side-channel attacks: side-channel attacks based on cache timing exploiting memory accesses, page faults, branch predictor, memory bus leakage, dirty bit, and others.

Hardware enclaves like Intel SGX are also prone to other side-channel attacks besides access pattern side channels (for example, speculative-execution-based attacks and attacks on remote attestation), which are not prevented by oblivious computation. Fortunately, when such attacks are discovered, they are typically patched in a short amount of time by cloud providers such as Azure Confidential Computing and others.

Even if hardware enclaves are vulnerable during the period before the patch, the traditional cloud security layer is designed to prevent attackers from breaking in far enough to mount such a side-channel attack. Subverting this layer and also setting up a side-channel attack in a real system with such protection is typically much harder for an attacker, because it requires succeeding at two different and difficult types of attacks. Succeeding at only one of them is not sufficient.

At the time of writing this blog post, we are not aware of any such dual attack having occurred on state-of-the-art public clouds like Azure Confidential Computing. This is why when using hardware enclaves, one assumes that the cloud provider is a well-intended organization, and its security practices are state-of-the-art, as one would expect from major cloud providers today.

Performance comparisons of secure computation methods

Cryptographic computation is efficient enough for running simple computations such as summations, counts, or low degree polynomials. As of the date of this blog post, cryptographic computation remains too slow to run complex functions such as machine learning training or rich data analytics.

Take, for example, training a neural network model. Recent state-of-the-art work on Falcon (2021) estimates that training a moderate-size neural network such as VGG16 on datasets like CIFAR10 could take years. This also assumes three parties with an honest majority, which is a weaker threat model than n organizations of which n-1 can be malicious.

Now let us take an example with the stronger threat model: our state-of-the-art work on Senate (published at USENIX Security 2021) enables rich SQL data analytics with maliciously-secure MPC. In Senate, we improved the performance of existing MPC protocols by up to 145x. Even with this improvement, Senate can only perform analytics on small databases of tens of thousands of rows, and cannot scale to hundreds of thousands or to millions of rows because the MPC computation runs out of memory and becomes very slow.

We have been making a lot of progress on reducing the memory overheads in our recent work on MAGE (OSDI 2021, best paper) and in another work on employing GPUs for secure computation learning, but the overheads of MPC still remain too high for realistic machine learning training and SQL data analytics. My prediction is that it will still take years until MPC becomes efficient for these workloads.

Some companies claim to run MPC efficiently for rich SQL queries and machine learning training: how come? From our investigation, we noticed that they decrypt a part of the data, or keep a part of the query processing in unencrypted form, which exposes that data and computation to an attacker. This compromise significantly reduces the privacy guarantee.

Hardware enclaves are far more efficient than cryptographic computation because, as explained, deep down in the processor, the CPU computes on unencrypted data. At the same time, data coming in and out of the processor is in encrypted form, and any software or entity outside of the enclave that examines the data sees it in encrypted form; this creates the effect of computing on encrypted data without the large overheads of MPC or homomorphic encryption.

The overheads of such computation depend a lot on the workload; for example, we have seen overheads ranging from 20% to 2x for many workloads. Adding side-channel protection like oblivious computation can increase the overhead, but overall the performance of secure computation using enclaves still remains much better than MPC/homomorphic encryption for many realistic SQL analytics and machine learning workloads. The amount of overhead from side-channel protection via oblivious computation varies with the workload, from almost no overhead for workloads that are already close to being oblivious to 10x overhead for some workloads.

MC² open source

If the reader would like to experiment with secure computation, either via MPC or hardware enclaves, my students and I have pushed a lot of our projects into open source, in our MC² framework. MC² stands for multi-party confidential computing, and enables the reader to experiment with both approaches in secure computation and their tradeoffs. Here are some of the projects we included in MC²:

- MPC projects: Cerebro (for machine learning), Delphi (for neural network prediction against semi-honest attackers), and Muse (for neural network prediction against malicious users)

- Hardware enclave projects: Secure XGBoost (for decision tree training and inference), and Opaque SQL (for rich SQL analytics via Spark SQL)

Conclusions

Secure computation via MPC/homomorphic encryption versus hardware enclaves presents tradeoffs with regard to deployment, security, and performance. Regarding performance, it matters a lot what workload one has in mind. For simple workloads (such as simple summations, low degree polynomials, or simple cases of machine learning prediction), both approaches are ready to use in practice, but for rich computations such as complex SQL analytics or machine learning training, only the hardware enclave approach is at this moment practical enough for many real-world deployment scenarios.